Table of contents

A rate limiter is a common component in system design that helps control the rate at which certain operations or requests are allowed to occur. It acts as a mechanism to prevent overloading a system by limiting the number of requests or actions processed within a specified time period.

A rate limiter is employed to regulate the flow of traffic originating from a client or service. Within the realm of HTTP, this mechanism restricts the number of client requests permitted within a designated timeframe. If the count of API requests surpasses the threshold set by the rate limiter, any surplus calls are obstructed.

Algorithms For Rate Limiting

Rate limiting is a crucial technique used in system design to control the rate at which certain operations or requests are allowed to be processed. It helps protect systems from abuse, prevents resource exhaustion, and ensures fair usage among users.

Leaking Bucket Algorithm

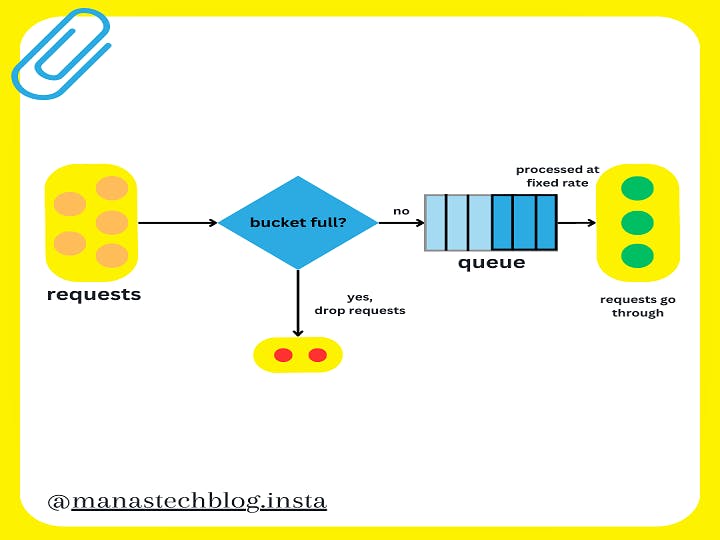

The Leaking Bucket Algorithm is a rate limiting technique used to control the rate at which requests or operations are processed. It operates by imagining a Leaking bucket where requests are poured into the bucket, and if the bucket overflows, excess requests are either rejected or handled separately.

The Leaking bucket algorithm is similar to the token bucket algorithm but works in the opposite manner.

Here, requests are processed at a fixed rate, and any excess requests are held in a "bucket" until they can be processed.

If the bucket overflows, the requests are either rejected or handled separately.

This algorithm provides a more consistent rate limiting approach, regardless of request size.

Here's a detailed explanation of how the token bucket algorithm works:

Initialization:

Define the maximum capacity of the bucket, which represents the maximum number of requests that can be processed within a specific time frame.

Set the leak rate, which determines the rate at which requests are drained or processed from the bucket.

Arrival of Requests:

As requests arrive, they are added to the bucket.

If the bucket is not full, the requests are accepted and processed immediately.

If the bucket is already full and cannot accommodate additional requests, they are either rejected or handled separately based on the system's configuration.

Processing Requests:

At regular intervals, the bucket "leaks" or processes a fixed number of requests, determined by the leak rate.

The leaked requests are removed from the bucket, creating space for new requests to be added.

Request Overflow:

If the rate at which requests arrive is higher than the rate at which requests are processed (leak rate), the bucket will eventually overflow.

When the bucket overflows, excess requests are either rejected or subjected to a different handling mechanism, such as being placed in a queue or delayed until the bucket has space again.

The leaking bucket algorithm takes two parameters:

Bucket size: It is equal to the queue size. The queue holds the requests to be processed at a fixed rate.

Outflow rate: It defines how many requests can be processed at a fixed rate, usually in seconds.

The Leaking Bucket Algorithm provides a consistent rate limiting mechanism. It allows the system to process requests at a fixed rate, ensuring that the rate doesn't exceed the configured leak rate. This helps in preventing resource exhaustion and maintaining a controlled flow of requests.

The algorithm is effective in handling bursty traffic since it allows requests to accumulate in the bucket during idle periods and processes them at a fixed rate. It ensures fair resource allocation and protects the system from being overwhelmed by sudden spikes in traffic.

However, it's important to note that the Leaking Bucket Algorithm may introduce some delay in processing requests, especially if the request arrival rate is significantly higher than the leak rate. It may also require additional mechanisms to handle scenarios such as prioritization or handling different types of requests.

Overall, the Leaking Bucket Algorithm is a reliable rate limiting technique that offers control, fairness, and protection against excessive traffic, making it suitable for various system design scenarios.

Advantages:

Smooth Traffic Control: The Leaking Bucket Algorithm provides a smooth and controlled flow of requests by processing them at a fixed rate. It helps prevent sudden spikes or overwhelming bursts of traffic, ensuring stability and predictability in system performance.

Fair Resource Allocation: By limiting the rate at which requests are processed, the algorithm promotes fair resource allocation among users. It prevents any particular user or request type from monopolizing system resources, ensuring a balanced distribution.

Protection Against Resource Exhaustion: The algorithm protects the system from resource exhaustion by regulating the rate at which requests are processed. It prevents overloading of servers or network resources, maintaining system availability and performance.

Simple Implementation: The Leaking Bucket Algorithm is relatively simple to understand and implement. It doesn't require complex calculations or intricate logic, making it accessible to developers.

Disadvantages:

Potential Delay in Response: If the rate of incoming requests exceeds the leak rate, the algorithm may introduce some delay in processing requests. This delay can result in increased response times for users during high traffic periods.

Inefficient Handling of Bursts: The Leaking Bucket Algorithm may not efficiently handle sudden bursts of requests. If a large number of requests arrive simultaneously, the algorithm processes them at a fixed rate, potentially causing a backlog and increased response times.

Difficulty in Handling Varying Traffic Patterns: The algorithm operates at a fixed processing rate, which may not be ideal for systems with highly variable traffic patterns. It may struggle to adapt to sudden changes in request rates or accommodate uneven loads effectively.

Limited Control over Prioritization: The Leaking Bucket Algorithm does not inherently provide mechanisms for prioritizing or differentiating between different types of requests. Additional techniques or modifications may be required to handle prioritization requirements within the system.

When considering the Leaking Bucket Algorithm, it's essential to evaluate its advantages and disadvantages in the context of the specific system requirements. Factors such as expected traffic patterns, response time requirements, burst handling capabilities, and prioritization needs should be taken into account to determine if the algorithm is suitable for the given system design.

Summarizing Up

In summary, rate limiters offer a range of benefits including preventing overload, protecting against attacks, ensuring fair resource allocation, improving scalability, enhancing stability and availability, safeguarding third-party integrations, and enabling usage-based billing. These benefits contribute to the overall reliability, performance, and security of a system.

The Leaking Bucket Algorithm is a rate limiting technique that regulates the flow of requests. It processes requests at a fixed rate, preventing resource exhaustion and ensuring system stability. Requests are poured into a virtual bucket, and if the bucket overflows, excess requests are either rejected or handled separately. The algorithm offers smooth traffic control, fair resource allocation, and protection against overload. However, it may introduce response delays during high traffic, struggle with sudden bursts, and require additional mechanisms for prioritization. Overall, the Leaking Bucket Algorithm provides controlled request processing, preventing system overload while promoting fairness and stability.