A rate limiter is a common component in system design that helps control the rate at which certain operations or requests are allowed to occur. It acts as a mechanism to prevent overloading a system by limiting the number of requests or actions processed within a specified time period.

In practice, a rate limiter regulates the flow of traffic coming from a client or service. For HTTP, it restricts the number of client requests permitted within a given timeframe. If the number of API requests exceeds the threshold set by the rate limiter, the excess calls are blocked.

High-level Architecture

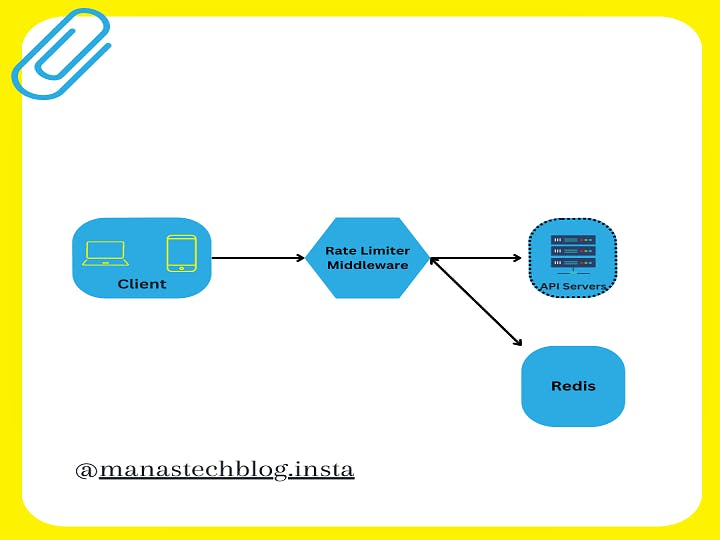

The high-level architecture of a rate limiter system has four components: the rate limiter middleware, the API servers, Redis, and the client.

Rate Limiter Middleware: The rate limiter middleware is responsible for intercepting incoming requests and determining whether they should be allowed or rate-limited. It sits between the client and the API server, acting as a gatekeeper. The middleware checks the request against predefined rules and applies rate limiting policies.

API Servers: API servers are the main components that process client requests and generate responses. They handle the core logic and functionality of the system. The rate limiter middleware runs in front of the API servers, either as a separate layer or as middleware inside the server process, so that rate limiting rules are enforced before a request reaches the core logic.

Redis: Redis is a popular in-memory data store used for caching and high-performance data operations. In the context of a rate limiter, Redis is often utilized to store and manage rate limiting information. It provides fast read/write operations and supports key-value pairs and data structures, making it suitable for rate limiting scenarios.

Redis can be used to store the following information related to rate limiting:

Tokens or counters: Redis can maintain a token bucket or counter for each client or client IP address. Tokens or counts can be decremented with each request, and the rate limiter middleware can check the remaining count to determine if the request should be allowed or limited.

Time windows: Redis can store timestamps or expiration times associated with each client's requests. The middleware can calculate the number of requests made within a specific time window to enforce rate limits.
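As a rough illustration of these two kinds of state, here is a minimal in-memory sketch of a token bucket and a sliding window log. It is not tied to Redis: the class names `TokenBucket` and `SlidingWindowLog`, the `clock` parameter, and the choice to keep everything in process memory are assumptions made for the example. In a real deployment the same values would be stored in Redis under a per-client key.

```python
import time
from collections import deque


class TokenBucket:
    """Token bucket: each request spends one token; tokens refill over time."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the behavior can be tested
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class SlidingWindowLog:
    """Sliding window log: keep request timestamps, count those still in the window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.timestamps = deque()

    def allow(self):
        now = self.clock()
        # Drop timestamps that have fallen out of the window.
        while self.timestamps and self.timestamps[0] <= now - self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:
            self.timestamps.append(now)
            return True
        return False
```

One instance would be kept per client (or per client IP address). The token bucket tolerates short bursts up to its capacity, while the sliding window log enforces a strict count over the window at the cost of storing one timestamp per request.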

Client: The client represents the entity making requests to the API servers. It could be a web browser, a mobile app, or any other system that interacts with the API. The client sends requests to the API servers, and the rate limiter middleware intercepts these requests to determine whether they should be allowed or rate-limited based on the defined policies.

The flow of a request through the rate limiter system typically follows these steps:

The client sends a request to the API server.

The rate limiter middleware intercepts the request and checks the client's rate limiting status.

The middleware consults Redis to determine if the client has exceeded the defined rate limits.

If the request is within the allowed limits, the middleware forwards it to the API server for processing.

If the request exceeds the rate limits, the middleware can either reject the request or apply some form of rate limiting strategy (e.g., delaying the request or returning an error response).

If the request was forwarded, the API server processes it and generates a response.

The response is sent back to the client.
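The steps above can be sketched as a small wrapper around a request handler. This is only an illustration under stated assumptions: `InMemoryCounterStore` is a hypothetical stand-in for Redis (with Redis, the counter would typically be an incremented key with an expiry), requests and responses are plain dictionaries, and the limiter uses a simple fixed window per `client_id`.

```python
import time


class InMemoryCounterStore:
    """Hypothetical stand-in for Redis: counters that expire after a TTL."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.data = {}  # key -> (count, expires_at)

    def incr(self, key, ttl_seconds):
        now = self.clock()
        count, expires_at = self.data.get(key, (0, now + ttl_seconds))
        if now >= expires_at:
            # The previous window ended, so start a new one.
            count, expires_at = 0, now + ttl_seconds
        count += 1
        self.data[key] = (count, expires_at)
        return count


def rate_limit_middleware(handler, store, limit, window_seconds):
    """Wrap handler so each client gets at most `limit` requests per window."""

    def wrapped(request):
        # Steps 2-3: intercept the request and consult the store.
        key = "rate:" + request["client_id"]
        count = store.incr(key, window_seconds)
        if count > limit:
            # Step 5: over the limit, so reject without calling the API server.
            return {"status": 429, "body": "Too Many Requests"}
        # Step 4: within the limit, so forward to the API server.
        return handler(request)

    return wrapped


def api_server(request):
    """Placeholder for the real request handler."""
    return {"status": 200, "body": "hello " + request["client_id"]}


app = rate_limit_middleware(api_server, InMemoryCounterStore(), limit=2, window_seconds=60)
```

Calling `app({"client_id": "alice"})` three times within a minute returns two 200 responses followed by a 429, while a different client is counted separately.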

By using rate limiting, the system can maintain stability, prevent abuse, and ensure fair resource allocation. The combination of the rate limiter middleware, API servers, Redis, and the client creates an effective architecture for enforcing rate limits in a distributed system.

Detailed Design of the Rate Limiter

Rate Limiting Rules

Rate limiting rules are created based on the specific requirements and policies of the system. These rules define the criteria for determining the allowed rate of requests for each client or client IP address. The rules can be customized to meet the needs of the system, such as limiting the number of requests per minute, hour, or day, or setting different limits for different types of requests or clients.

The rate limiting rules can be created and managed in various ways, depending on the implementation:

Configuration files: Rules can be defined in configuration files specific to the rate limiter middleware or the API servers. These files typically contain settings and parameters that determine the rate limits and associated policies.

Code-based configuration: Some systems allow rate limiting rules to be specified directly in the code. Developers can write code snippets that define the rules and policies programmatically.

Rate limiting middleware/API: Rate limiting rules can be created and managed through dedicated rate limiting middleware or API endpoints. These components provide an interface for administrators or developers to configure and update the rules dynamically.

As for where the rules are stored, it depends on the specific implementation and architecture of the rate limiter system. Some common storage options include:

Database: The rate limiting rules can be stored in a dedicated database, such as MySQL, PostgreSQL, or MongoDB. This allows for efficient storage, retrieval, and management of the rules.

Configuration files: As mentioned earlier, the rules can be stored in configuration files that are read by the rate limiter middleware or API servers. These files can be stored on disk or in a version control system for easy access and version management.

Key-value store: In some cases, the rate limiting rules may be stored in a key-value store like Redis or Memcached. This allows for quick and efficient retrieval of the rules during runtime.

The choice of storage depends on factors such as performance requirements, scalability, ease of management, and the overall architecture of the rate limiter system.

Examples of Rate Limiting Rules

Request Rate Limit: Set the maximum number of requests a client can make within a specific time window. For instance, limit a client to 100 requests per minute or 1000 requests per hour.

    # Request Rate Limit
    - type: request
      limit: 100    # Maximum number of requests
      interval: 60  # Time interval in seconds (e.g., 60 seconds = 1 minute)

IP-based Rate Limit: Impose rate limits based on the IP address of the client. This prevents a single IP address from overwhelming the system. For example, restrict an IP address to 50 requests per minute.

    # IP-based Rate Limit
    - type: ip
      limit: 50     # Maximum number of requests
      interval: 60  # Time interval in seconds

API Endpoint Rate Limit: Apply different rate limits for different API endpoints. For instance, limit the number of requests to the "/login" endpoint to 5 requests per minute, while allowing 100 requests per minute to the "/search" endpoint.

    # API Endpoint Rate Limit
    - type: endpoint
      endpoint: /login
      limit: 5      # Maximum number of requests
      interval: 60  # Time interval in seconds
    - type: endpoint
      endpoint: /search
      limit: 100    # Maximum number of requests
      interval: 60  # Time interval in seconds

Burst Limit: Set a maximum number of requests allowed in a short burst of time, allowing temporary spikes in traffic. For example, allow a burst of 10 requests within a 5-second window, but maintain an average rate limit of 50 requests per minute.

    # Burst Limit
    - type: burst
      limit: 10    # Maximum number of requests in burst
      interval: 5  # Time interval in seconds

User-based Rate Limit: Implement rate limits based on user accounts. This can be useful in systems where users have different access levels or subscription tiers. For example, limit free users to 20 requests per hour, while premium users have a limit of 100 requests per hour.

    # User-based Rate Limit
    - type: user
      user: free_user
      limit: 20       # Maximum number of requests
      interval: 3600  # Time interval in seconds (e.g., 3600 seconds = 1 hour)
    - type: user
      user: premium_user
      limit: 100      # Maximum number of requests
      interval: 3600  # Time interval in seconds

Token-based Rate Limit: Introduce rate limiting based on authentication tokens or API keys. Each token can have its own rate limit associated with it. This allows more granular control over different client applications or third-party integrations.

    # Token-based Rate Limit
    - type: token
      token: API_TOKEN_123
      limit: 50     # Maximum number of requests
      interval: 60  # Time interval in seconds
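To show how rules like the examples above might be consulted at runtime, here is a minimal sketch that represents some of them as Python objects and selects the ones that apply to a request. The `Rule` class, the `match` field, and the request keys `path`, `user_tier`, and `token` are assumptions made for illustration; the examples themselves do not specify how a rule is matched against a request.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    type: str                    # "request", "ip", "endpoint", "user", or "token"
    limit: int                   # maximum number of requests
    interval: int                # time interval in seconds
    match: Optional[str] = None  # value to match, e.g. "/login" or "free_user"


# A subset of the example rules, written out by hand.
RULES = [
    Rule("endpoint", 5, 60, "/login"),
    Rule("endpoint", 100, 60, "/search"),
    Rule("user", 20, 3600, "free_user"),
    Rule("user", 100, 3600, "premium_user"),
    Rule("ip", 50, 60),
    Rule("request", 100, 60),
]


def matching_rules(request, rules=RULES):
    """Return every rule that applies to the request, in rule order."""
    matched = []
    for rule in rules:
        if rule.type in ("request", "ip"):
            # These rules apply to every request; only the counter key differs.
            matched.append(rule)
        elif rule.type == "endpoint" and rule.match == request.get("path"):
            matched.append(rule)
        elif rule.type == "user" and rule.match == request.get("user_tier"):
            matched.append(rule)
        elif rule.type == "token" and rule.match == request.get("token"):
            matched.append(rule)
    return matched
```

A request is then allowed only if it is within the limit of every matching rule, so a free user calling "/login" would be bound by the 5 requests per minute endpoint rule as well as the hourly user rule.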

Exceeding The Rate Limit

When a request is rate-limited, there are several approaches to handling it. Here are a few common strategies:

Delayed Retry: In this approach, when a request is rate-limited, the server responds with the HTTP 429 (Too Many Requests) status code. The client can then implement a retry mechanism, where it waits for a certain period (usually the duration given in the Retry-After header) and retries the request after the delay. The delay allows the client to respect the rate limiting restrictions and avoid overwhelming the system.

Error Response: When a request is rate-limited, the server can respond with an error response immediately, indicating that the rate limit has been exceeded. The client can handle this error response appropriately, either by displaying an error message to the user or by implementing a fallback behavior.

Request Queuing: Instead of immediately rejecting the rate-limited requests, they can be added to a queue for processing later. The server can prioritize the queued requests based on their arrival time or other criteria. Once the rate limit allows, the requests from the queue can be processed in the order they were received. This approach helps ensure fairness and allows the system to process the requests when the rate limit is no longer exceeded.

Graceful Degradation: In situations where rate limiting occurs due to excessive load or system issues, the server can respond with a degraded or simplified version of the requested data or functionality. This approach aims to provide a partial response or fallback content to the client instead of completely blocking the request.

The choice of handling strategy depends on the specific requirements and behavior of your system. It's important to consider the impact on user experience, system resources, and the goals of rate limiting when deciding how to handle rate-limited requests.
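As one example, the delayed retry strategy could look roughly like this on the client side. The function `call_with_retry` and the shape of `send` (a callable returning a status, a headers dictionary, and a body) are hypothetical, chosen to keep the sketch independent of any particular HTTP library.

```python
import time


def call_with_retry(send, max_attempts=3, default_delay=1.0, sleep=time.sleep):
    """Call send() until it is not rate-limited or attempts run out.

    send() is a hypothetical callable returning (status, headers, body).
    """
    for attempt in range(1, max_attempts + 1):
        status, headers, body = send()
        if status != 429:
            return status, body
        if attempt == max_attempts:
            break
        # Honor Retry-After when it is given in seconds; otherwise
        # (missing header or an HTTP date) fall back to the default delay.
        try:
            delay = float(headers.get("Retry-After", default_delay))
        except ValueError:
            delay = default_delay
        sleep(max(0.0, delay))
    # Still rate-limited after the last attempt: surface the 429 to the caller.
    return status, body
```

Capping the number of attempts matters here: a client that retries forever adds to the load the rate limiter is trying to shed.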

Rate Limiter Headers

Rate limiter headers are HTTP headers used to communicate rate limiting information between the server and the client. These headers provide valuable details about the rate limiting status of a request and help the client and server coordinate their behavior. Here are some commonly used rate limiter headers:

X-RateLimit-Limit: This header specifies the maximum number of requests allowed within a certain time period. It indicates the rate limit imposed by the server. For example, "X-RateLimit-Limit: 100" means that the client is allowed to make 100 requests within the specified time period.

X-RateLimit-Remaining: This header indicates the number of requests remaining before the rate limit is reached. It provides real-time feedback to the client about the remaining capacity for making requests. For example, "X-RateLimit-Remaining: 50" means that the client can make 50 more requests before hitting the rate limit.

X-RateLimit-Reset: This header specifies the time when the rate limit will reset or refresh. It is often represented as a Unix timestamp or the number of seconds until the reset. The client can use this information to determine how long it needs to wait before the rate limit is reset and more requests can be made.

Retry-After: This header tells the client how long to wait before retrying a request that has been rate-limited. Its value is either a number of seconds or an HTTP date. It is typically used in conjunction with the HTTP status code 429 (Too Many Requests) and provides guidance to the client on when it can try again. The client can honor this header and implement a delayed retry mechanism.

The X-RateLimit headers are usually added by the server to its responses, so the client can track its quota before it runs out, while Retry-After accompanies a rate-limited response. The client can inspect these headers and adjust its behavior accordingly. For example, if the X-RateLimit-Remaining value is close to zero, the client may decide to slow down or pause its requests to respect the rate limiting restrictions.

It's important to note that the exact names and formats of these headers can vary depending on the implementation and conventions used in the system. Additionally, rate limiter headers are not limited to these examples, and custom headers can be defined to convey additional rate limiting information specific to your system.
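For illustration, a server using a fixed window might build these headers along the following lines. The function name `rate_limit_headers` and its parameters are assumptions, as is the choice to express X-RateLimit-Reset as a Unix timestamp; as noted above, real systems differ in the names and formats they use.

```python
import math


def rate_limit_headers(limit, used, window_start, window_seconds, now):
    """Build rate limit response headers for a fixed window.

    All times are Unix timestamps in seconds. `used` counts the requests
    seen in the current window, including the one being answered.
    """
    remaining = max(0, limit - used)
    reset_at = window_start + window_seconds
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(remaining),
        "X-RateLimit-Reset": str(int(reset_at)),
    }
    if used > limit:
        # The request was rejected: tell the client how long to wait.
        headers["Retry-After"] = str(max(0, math.ceil(reset_at - now)))
    return headers
```

Rounding the Retry-After value up rather than down avoids telling the client to retry a fraction of a second before the window actually resets.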

Summing Up

Rate limiting is a crucial mechanism to control the rate of requests and protect systems from abuse or overload. It involves intercepting requests using rate limiter middleware, which enforces predefined rules. API servers process the requests, while Redis often serves as a high-performance storage solution for rate limiting information. Rate limiting rules can be created based on criteria like request rates, IP addresses, user accounts, and API endpoints. Requests that exceed rate limits can be handled through delayed retries, error responses, request queuing, or graceful degradation. Rate limiter headers, such as X-RateLimit-Limit, X-RateLimit-Remaining, X-RateLimit-Reset, and Retry-After, communicate rate limiting information between servers and clients.